Importing Models

This guide shows how to import models from OpenAI API-compatible providers and from a local Ollama instance.

Catalyst comes with a default model called VooV that lets you perform basic tasks. You can also add your own models, running either on your local machine or on your local network.

Many AI models and providers expose an OpenAI-compatible API. This compatibility is what allows Catalyst to communicate with them.
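To make "OpenAI API compatibility" concrete, here is a minimal Python sketch of the kind of request an OpenAI-compatible server accepts. It is not Catalyst's own code. The base URL `http://localhost:11434/v1` (Ollama's OpenAI-compatible endpoint), the model name, and the helper names `build_chat_request` and `send_chat_request` are illustrative assumptions; substitute the values for your provider.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    base_url is assumed to end at the API root, e.g. ".../v1".
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Hosted providers usually expect a bearer token; a local
        # server such as Ollama typically does not need one.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first reply choice."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With a compatible server running, `send_chat_request(build_chat_request("http://localhost:11434/v1", "llama3", "Say hello"))` would return the model's reply. Because the request shape is the same across compatible providers, only the base URL, model name, and API key need to change.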

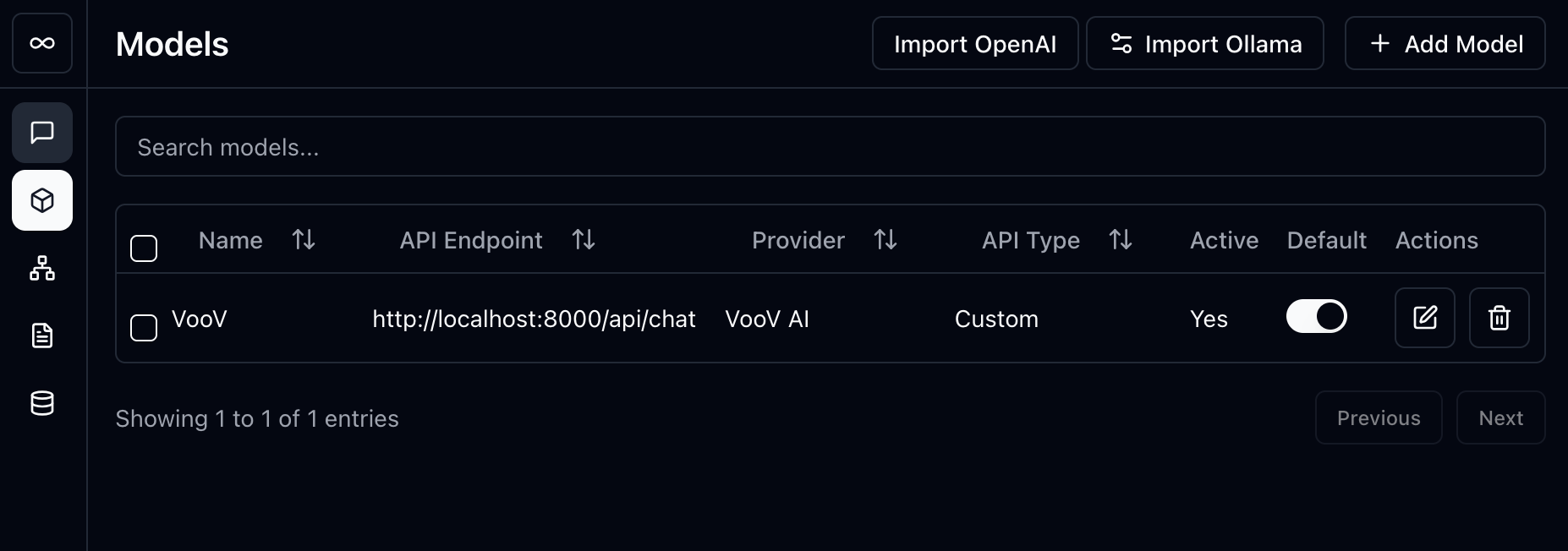

Models Page

To get to the Models page, navigate to

https://catalyst.voov.ai/models

or click the Models icon in the menu.

Initially, you will see only one model.

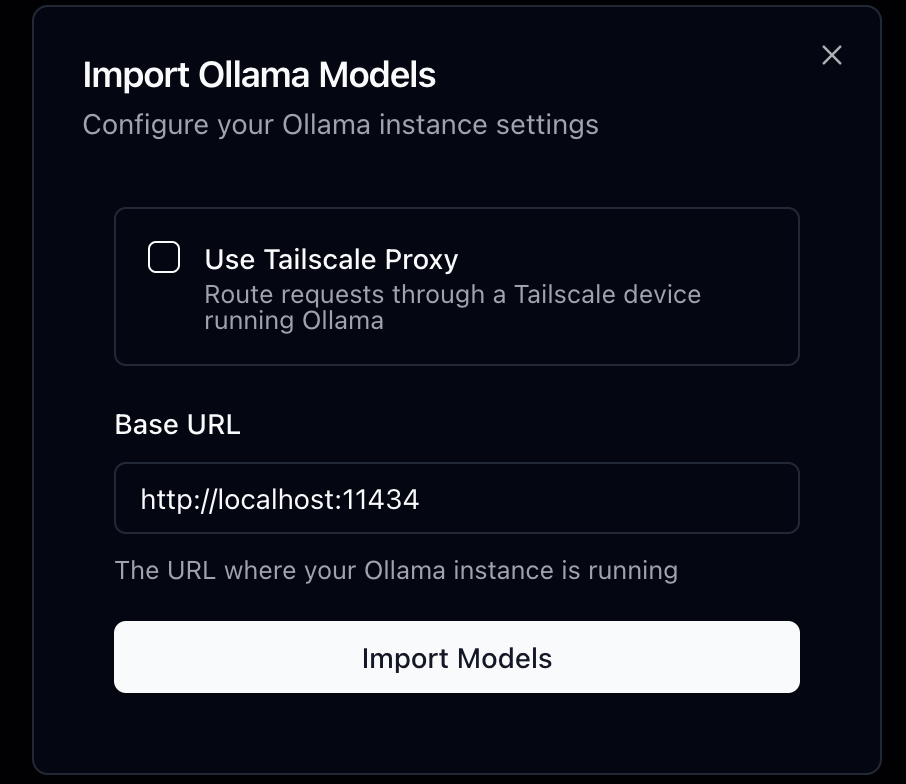

If you already have Ollama running locally, you can click Import Ollama.

Click on Import Models.

Catalyst connects to localhost on port 11434 to import the models.

If Ollama is not running, or if it does not allow cross-origin (CORS) requests, the import will fail.

Please follow the guide on how to allow CORS in Ollama.
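If the import fails, it can help to first confirm that Ollama is reachable on port 11434. The sketch below is a hedged example, not part of Catalyst: it queries Ollama's `/api/tags` endpoint, which lists locally installed models, and returns `None` when the server cannot be reached. The function names are hypothetical.

```python
import json
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama address, as used by the import


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [model["name"] for model in payload.get("models", [])]


def list_ollama_models(base_url: str = OLLAMA_URL, timeout: float = 3.0):
    """Return installed model names, or None if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags",
                                    timeout=timeout) as resp:
            return parse_model_names(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON response.
        return None
```

Calling `list_ollama_models()` returns a list of names when Ollama is running and `None` otherwise. Note that this only checks reachability: CORS is enforced by the browser, so a script like this can succeed while the import from the Models page still fails until CORS is allowed.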